What is Data Annotation in AI or ML?

Simply providing a computer with massive amounts of data and expecting it to learn to perform a task is not enough. The data has to be presented in such a way that a computer can easily recognize patterns and inferences from the data. This is usually done by adding relevant metadata to a set of data. Any metadata tag used to mark up elements of the dataset is called an annotation over the input. The term data labelling is also used interchangeably with data annotation to refer to the technique of tagging labels in contents available in a range of formats. As such, there is no major difference between data labeling and data annotation, except the style and type of tagging the content or object of interest.

Both are used to create machine learning training data sets depending on the type of AI model development and process of training the algorithms for developing such models. Data annotation is basically the technique of labeling the data so that the machine could understand and memorize the input data using machine learning algorithms. Data labeling, also called data tagging, means to attach some meaning to different types of data in order to train a machine learning model. Labeling identifies a single entity from a set of data.

Why is data annotation important?

With the advancements in deep learning algorithms, computer vision and NLP have greatly evolved and done wonders around the world of AI. This has led many industries to adopt AI smoothly and make efficient use of it in various use cases. But even these Machine learning models require both human and machine intelligence. This is called a human-in-the-loop model, where human judgment is used to continuously improve the performance of a machine learning model. Likewise, the process of data annotation needs humans.

Human-annotated data powers machine learning. When it comes to data annotation, human judgment introduces subjectivity, intent, and clarification. As humans, this is one of the areas where we have an upperhand over the computers since we can better deal with ambiguity, decipher the intent, and many other factors that go into data annotation.

High-quality training data is the lifeblood of computer vision applications. Machine learning is dependent on the quality and quantity of its training data. The importance of quality datasets in machine learning can be summarized by one saying: "garbage in, garbage out."

Thus, Machine learning models are only as good as the data that is used to train them. Properly labelled data guarantees success in all ML projects but even a smallest error in preparing the data for training ML models can be detrimental and disastrous. Data annotation enables AI to reach its full potential. Numerous benefits are coming from AI, and with correct data labelling, we can get the best and most value from it.

As it currently stands, data scientists spend a significant portion of their time preparing data, according to a survey by data science platform Anaconda. Part of that is spent fixing or discarding anomalous/non-standard pieces of data and making sure measurements are accurate. These are vital tasks, given that algorithms rely heavily on understanding patterns in order to make decisions, and that faulty data can translate into biases and poor predictions by AI.

Types of Data Annotation:

We, at Kenzyte, really understand the importance of data annotation and thus provide the industry with pixel perfect annotations.

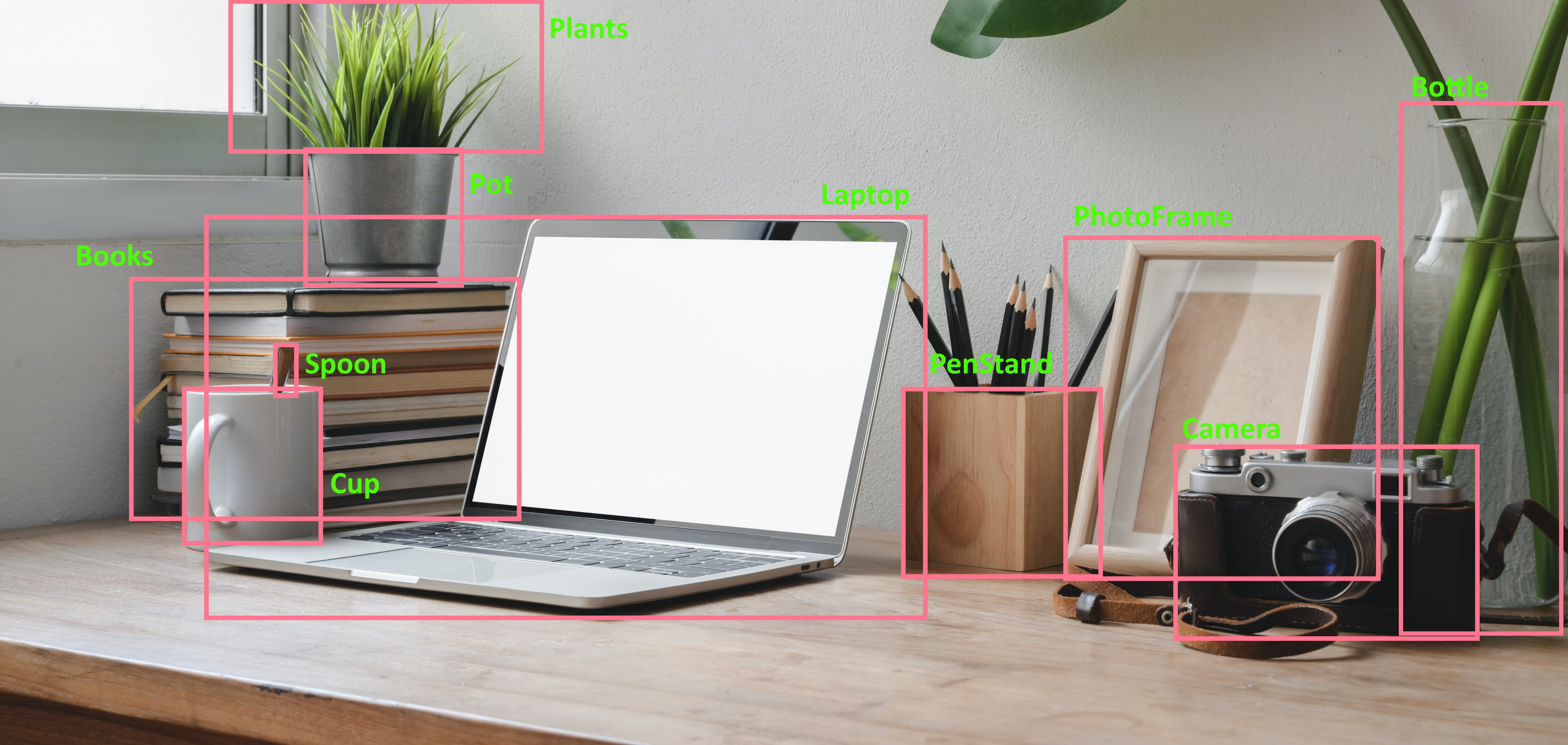

Image Labelling and Annotation:

Here, The elements in an image is labelled and annotated individually. Tagging can vary from objects, animals,background locations and even distortions.Some of the annotation for images includes bounding boxes,3D cuboid annotation polygon annotation, and landmark annotation.

Video Annotation:

It is more or less similar to the image annotation in term of purposes but differs in the fact that video annotation relies on computer vision to recognize moving objects.Objects are localized abd tagged in the similar fashion as in the image annotation but happens in per frame.Video annottaion is of lot of importance and can be utilised for facial recognition, surveillance, self-driving cars and more.

Text Annotation:

It is required to tag sentences,phrases, and texts from articles,social media comments and posts, messages and others based on requirement for processing.Text annotation involves identification of sentence structure, tagging intent, emotion, urgency, and much more for sentiment analysis,chatbot responses and diverse purposes.

Audio Annotation:

It is mainly used in Natural Language processing and Speech recognition.In Audio Annotation sentaces and phrases are tagged with adequate metadata and keywords for optimized processing.From sentiment analysis and virtual assistant responses to voice search optimization, audio annotation is put to a countless number of uses